Think about all the ways we interact with IT systems today. We’ve all stopped at some point and realized how painful it was to use a product sold by vendor X. We have our own ideas about how the product’s user experience should flow, and we’re disappointed when we can’t carry out an operation in the order that seems logical to us. Whether it’s a CLI, an API, or a GUI, we’re always upset that the experience just isn’t there. It doesn’t meet _our_ needs.

Stepping back and looking at the world around us, we’re not thrilled with the user experience of much of anything we use today. We’re constantly complaining about where button Y is located, or why knob Z isn’t where we consider logical. A good example is the door unlock button in the Chevy Cruze I bought last year. Over the last 15 years I’ve been conditioned to expect the door unlock button ON THE DOOR, but in the Cruze (and other new makes and models) it has been relocated to the center console. Now, every time I go to lock or unlock the doors, I reach for the wrong place. This is what I would call bad user experience. I would also call it a terrible example of first world problems, but I will use the illustration nonetheless. It doesn’t fit the logic that I’ve grown accustomed to over the past decade and a half.

Something as simple as the location of a button has caused me a small amount of grief when operating my vehicle. Can I still use the vehicle? Sure, but it’s going to take some getting used to, and I’ll have to modify my ‘unlock car door’ workflow. Now look at the bigger picture: for the next generation of kids who inherit these cars as their first mode of transportation, the center console is simply where that button lives within the vehicle, not on the door as our generation understands it. We each have a different interpretation, one shaped by preconceptions and the other formed fresh through use of the product.

UX is a fluid concept, not something etched in stone. It’s entirely subjective to the individual using the product. That subjectivity is usually driven by a perspective the user has built over time about how something should look, feel, and operate within the way they personally view the system.

We’re not paying attention to the fact that everyone interprets UX differently. We’ve talked before about how the network world is largely workflow driven, but we haven’t taken the time to understand that everyone performs a given workflow with small nuances of their own. There is a set of atomic constructs that we use to accomplish a task, and we each string them together a bit differently as we execute our workflow. This is why we think UX sucks on every product out there: it isn’t customized to our own logical interpretation of how the task should be accomplished.

When we define the workflows needed to administer an IT infrastructure, we all build them from the same atomic elements. VLAN X needs to propagate throughout infrastructure Y and connect nodes Z to form whatever system we’re building. Then the nuances of the particular workflow come into play. We may see fit to confirm that the VLAN has been created, through some sort of show command or log parse, before moving on to step 2, or we may not. We may want to publish that information to another system rather than validating it on the platform we’re currently working with. And that’s just service instantiation; it doesn’t include validation, troubleshooting, tearing a service down, and so on.
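To make the idea concrete, here is a minimal sketch in Python of two people stringing the same atomic tasks together differently. Everything in it is hypothetical: `create_vlan`, `verify_vlan`, `publish_vlan`, and `run` are illustrative names, and the "infrastructure" is just a dictionary, not a real device or vendor API.

```python
from typing import Any, Callable, Dict, List

# Shared state handed from step to step; a stand-in for real devices and systems.
State = Dict[str, Any]
Step = Callable[[State], None]


def create_vlan(state: State) -> None:
    """Atomic task: record the VLAN as configured (no real device is touched)."""
    state.setdefault("vlans", set()).add(state["vlan_id"])


def verify_vlan(state: State) -> None:
    """Atomic task: stand-in for a show command or log parse."""
    if state["vlan_id"] not in state.get("vlans", set()):
        raise RuntimeError(f"VLAN {state['vlan_id']} was not created")
    state["verified"] = True


def publish_vlan(state: State) -> None:
    """Atomic task: hand the result to some other system (here, a plain list)."""
    state.setdefault("published", []).append(state["vlan_id"])


def run(steps: List[Step], vlan_id: int) -> State:
    """Execute the chosen steps in the order the user listed them."""
    state: State = {"vlan_id": vlan_id}
    for step in steps:
        step(state)
    return state


# Same atomic tasks, two different personal workflows.
cautious = [create_vlan, verify_vlan]      # validates before moving on
integrator = [create_vlan, publish_vlan]   # publishes elsewhere instead
```

Calling `run(cautious, 100)` and `run(integrator, 100)` both end with VLAN 100 created, but only the first marks it verified and only the second publishes it. Neither ordering is wrong; each just reflects how a different person wants the task to flow.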

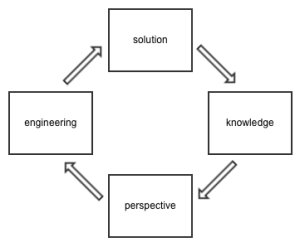

Everyone interacts with these systems differently, and we all bring our own nuances and history to those interactions. The best answer would be a user-defined UX that fits precisely the workflow a particular user is looking for: a workflow modeling tool that keeps the atomic tasks well defined but lets you decide how, when, where, and in what order to use them. That would provide the user experience that everyone in IT is craving today.